TL;DR

Generative AI is helping developers ship code faster than ever. But faster code output means more vulnerabilities, more scanner findings, and more AI-generated “fixes” that create new issues. The result is a costly feedback loop. The way out is not more scanning – it is shifting AI-powered security left, embedding it directly into the development workflow before insecure code ever gets written.

The Productivity Trap Nobody Talks About

Here is the story playing out at many engineering orgs right now: developers adopt AI coding assistants, their code output jumps, and leadership celebrates the productivity gains.

What nobody celebrates – because it shows up at a later stage – is the corresponding spike in security findings.

More code means more attack surface. More AI-generated code means more patterns that look correct but contain subtle misconfigurations, insecure defaults, or logic flaws that a human developer might catch through experience. AI-generated code is not inherently less secure than human code, but the sheer increase in code volume amplifies existing security weaknesses in development processes.

This is not a theoretical risk. Security teams across the industry are reporting noticeable increases in vulnerability findings as AI-assisted development ramps up. The code compiles. The tests pass. The security scanner lights up like a Christmas tree.

The Feedback Loop That Eats Your Sprint

Here is where it gets worse. When scanners flag these vulnerabilities, what do developers do? They ask the AI to fix them.

The AI generates a patch. Sometimes it is correct. Sometimes it introduces a different vulnerability. Sometimes it addresses the scanner finding without addressing the underlying security flaw – passing the check while leaving the risk intact.

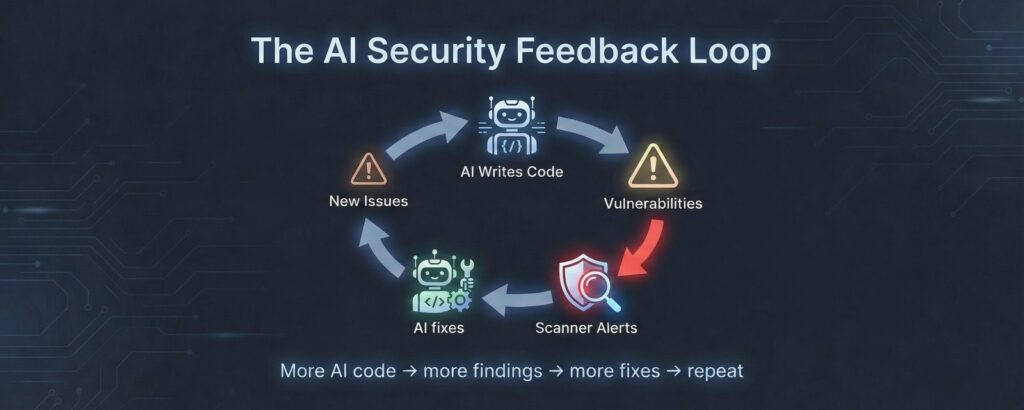

This creates a feedback loop:

1. AI writes code with insecure patterns

2. Security scanners detect the issues

3. Findings get reported to developers

4. Developers use AI to remediate

5. AI generates fixes that may introduce new issues

6. Back to step 2

Each cycle burns time, erodes trust between security and engineering teams, and – worst of all – creates a false sense of progress. Ticket counts go down. Actual security posture barely moves.

The organizations stuck in this loop share a common trait: they treat security as a gate at the end of the pipeline rather than a guardrail throughout it.

Why Traditional “Shift Left” Is Not Enough

You have heard “shift left” for a decade. Move security earlier. Scan in CI. Train developers. The concept is solid, but the execution has mostly meant “run the same scanners earlier in the pipeline.”

That does not work when AI is generating code at volume.

Traditional shift-left assumes developers write code, then security tools check it. The intervention point is between writing and shipping. But when AI generates code in real time, the intervention point needs to be between the AI and the developer – before the code even enters the codebase.

The fundamental problem: scanning for vulnerabilities after code is written is like spell-checking after you have printed the book. You catch errors, but the cost of fixing them is already baked in.

What AI-Native DevSecOps Actually Looks Like

Breaking the feedback loop requires rethinking where and how security interacts with AI-assisted development. Not as a blocking gate. As an embedded partner.

Here is what that looks like in practice:

1. AI Security Checks at the Point of Generation

Instead of scanning code after it is committed, embed security validation into the AI coding workflow itself. When a developer accepts an AI suggestion, a lightweight security model should evaluate it before it hits the file. Modern AI coding tools can also enforce secure development “skills” or rules at generation time. For example, just as a skills file might require the AI to add clear comments to generated code, it can also require security defaults such as always using parameterized queries for SQL, never constructing queries via string concatenation, and following approved authentication patterns. This shifts security from late detection to built-in generation-time guardrails.

This is not about blocking suggestions. It is about annotating them. Flag the insecure default. Suggest the secure alternative. Let the developer make an informed choice in the moment.
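
As a rough illustration of annotating rather than blocking, the sketch below runs a suggestion through two pattern rules and returns notes that an IDE could show next to the code. Everything in it is an assumption made for the example: the `Annotation` type, the rule names, and the regular expressions are hypothetical, and a real check would use a proper analyzer or security model rather than regexes.

```python
import re
from dataclasses import dataclass


@dataclass
class Annotation:
    line: int      # 1-based line number inside the suggestion
    rule: str      # hypothetical rule identifier
    message: str   # what is wrong, plus the secure alternative


# Illustrative rules only (assumed for this sketch, not a complete rule set).
RULES = [
    (
        "sql-string-concat",
        # execute( followed by an f-string, or a string literal joined with + or %
        re.compile(r"execute\s*\(\s*(f[\"']|[\"'][^\"']*[\"']\s*(\+|%))"),
        "Query built from strings; use a parameterized query instead.",
    ),
    (
        "tls-verify-disabled",
        re.compile(r"verify\s*=\s*False"),
        "TLS certificate verification is disabled; remove verify=False.",
    ),
]


def annotate(suggestion: str) -> list[Annotation]:
    """Return annotations for a suggestion. The suggestion is never blocked."""
    notes = []
    for lineno, text in enumerate(suggestion.splitlines(), start=1):
        for rule, pattern, message in RULES:
            if pattern.search(text):
                notes.append(Annotation(lineno, rule, message))
    return notes


if __name__ == "__main__":
    risky = 'cursor.execute("SELECT * FROM users WHERE id = " + user_id)'
    for note in annotate(risky):
        print(f"line {note.line}: [{note.rule}] {note.message}")
```

The function only returns annotations. Whether to accept, edit, or reject the suggestion stays with the developer.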

What changes: Developers see security feedback, or already-improved code, in their IDE as they work, not in a Jira ticket days later.

2. Real-Time AI Security Guidance

Think of this as a security-focused copilot running alongside the coding copilot. When a developer is writing authentication logic, the security model surfaces relevant patterns – not generic OWASP links, but context-aware guidance specific to the codebase, framework, and threat model.

The best implementations do this transparently:

- Autocomplete intercept: Before an AI suggestion is shown, it passes through a security filter

- Contextual warnings: “This S3 bucket configuration allows public access – here is the secure version”

- Framework-aware rules: Different guidance for Django vs. Express vs. Spring, tuned to each framework’s common pitfalls
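
The three ideas in the list above can be sketched as one small filter that sits between the assistant and the editor. This is a minimal sketch under stated assumptions: `security_filter`, the check tables, and the substring matching are all hypothetical, and the handful of rules shown are examples of well-known pitfalls, not a vetted rule set.

```python
from typing import Callable, Optional

# A check inspects suggested code and returns a warning, or None if it is fine.
Check = Callable[[str], Optional[str]]


def contains(needle: str, warning: str) -> Check:
    """Build a naive substring check (assumed for illustration only)."""
    def check(code: str) -> Optional[str]:
        return warning if needle in code else None
    return check


# Checks that apply regardless of framework.
GENERIC_CHECKS: list[Check] = [
    contains("public-read", "This S3 configuration allows public access; use a private ACL."),
]

# Hypothetical per-framework rules, tuned to each framework's common pitfalls.
FRAMEWORK_CHECKS: dict[str, list[Check]] = {
    "django": [
        contains("DEBUG = True", "Django: do not ship DEBUG = True; read it from the environment."),
        contains("@csrf_exempt", "Django: this view opts out of CSRF protection."),
    ],
    "express": [
        contains("res.send(req.query", "Express: request input is reflected without escaping."),
    ],
    "spring": [
        contains("csrf().disable()", "Spring: CSRF protection is disabled."),
    ],
}


def security_filter(suggestion: str, framework: str) -> dict:
    """Run a suggestion through generic and framework-specific checks before display."""
    checks = GENERIC_CHECKS + FRAMEWORK_CHECKS.get(framework, [])
    warnings = [w for check in checks if (w := check(suggestion)) is not None]
    return {"suggestion": suggestion, "warnings": warnings}
```

Calling `security_filter(code, "django")` returns the original suggestion together with any warnings, so the editor can display both. The same code checked as `"express"` skips the Django-only rules.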

What changes: Security knowledge is embedded in the workflow, not locked in a wiki nobody reads.

3. Intelligent Prioritization Over Vulnerability Noise

Not all findings are equal. AI can help security teams separate signal from noise by correlating findings with:

- Reachability analysis: Is this vulnerable code path actually reachable from an external input?

- Exploit likelihood: Does this misconfiguration exist in a test environment or production?

- Business context: Is this a payment-processing service or an internal admin tool?

- Pattern recognition: Are these 47 findings actually one root cause repeated across AI-generated code?

The last point matters most in the AI era. When a coding assistant picks up one bad pattern, it reproduces it everywhere. A smart prioritization layer recognizes that those 47 “distinct” findings share one root cause and need one fix applied at the source.
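
A minimal sketch of such a layer follows. It assumes a hypothetical `Finding` record whose fields mirror the four signals above, treats findings with the same rule and the same matched snippet as one root cause, and ranks groups with made-up weights. Real scanners expose different fields, and the weights would need tuning.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule_id: str        # scanner rule that fired
    file: str           # where it fired
    snippet: str        # normalized code pattern the scanner matched
    reachable: bool     # reachable from an external input?
    environment: str    # "production" or "test"
    service_tier: str   # "critical" (e.g. payments) or "internal"


def group_by_root_cause(findings):
    """Collapse findings that share a rule and a code pattern into one group."""
    groups = defaultdict(list)
    for finding in findings:
        groups[(finding.rule_id, finding.snippet)].append(finding)
    return dict(groups)


def priority(group) -> int:
    """Score a group; the weights are assumptions chosen for illustration."""
    score = 0
    if any(f.reachable for f in group):
        score += 4
    if any(f.environment == "production" for f in group):
        score += 2
    if any(f.service_tier == "critical" for f in group):
        score += 1
    return score


def triage(findings):
    """Return root-cause groups, highest priority first."""
    groups = group_by_root_cause(findings)
    return sorted(groups.values(), key=priority, reverse=True)
```

Fed 47 findings that share one rule and one snippet across 47 files, `triage` returns a single group for them, which is the unit a team would actually fix.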

What changes: Security teams stop drowning in findings and start fixing what matters.

4. Prevention Over Detection

This is the real shift. A common rule of thumb holds that every dollar spent preventing insecure code from being written saves ten dollars finding it and a hundred dollars fixing it in production.

Prevention means:

- Secure code templates that AI assistants draw from instead of the open internet

- Organization-specific security policies encoded as AI-readable rules

- Pre-approved patterns for common security-sensitive operations (auth, crypto, data handling)

- Guardrails on AI code generation that make the secure path the path of least resistance
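
One way to picture "policies encoded as AI-readable rules" is a small policy table rendered into a rules block that is prepended to every generation request. The sketch below is an assumption-heavy illustration: the `SECURITY_POLICY` contents, the rule wording, and the `build_prompt` helper are hypothetical, and actual AI coding tools each have their own rules or skills file format.

```python
# Hypothetical organization policy, keyed by security-sensitive topic.
SECURITY_POLICY = {
    "sql": [
        "Always use parameterized queries.",
        "Never construct queries via string concatenation.",
    ],
    "auth": [
        "Follow the approved authentication patterns; do not write custom login flows.",
    ],
    "crypto": [
        "Use the approved crypto library; do not implement primitives by hand.",
    ],
}


def render_rules(policy, topics=None) -> str:
    """Render the policy (or a subset of topics) as plain text an assistant can read."""
    selected = topics if topics is not None else sorted(policy)
    lines = ["# Security rules (organization policy)"]
    for topic in selected:
        lines.append(f"## {topic}")
        lines.extend(f"- {rule}" for rule in policy[topic])
    return "\n".join(lines)


def build_prompt(task: str, policy, topics=None) -> str:
    """Put the rules ahead of the task so they apply at generation time."""
    return f"{render_rules(policy, topics)}\n\n# Task\n{task}"


if __name__ == "__main__":
    print(build_prompt("Add an endpoint that looks up a user by email.", SECURITY_POLICY, ["sql"]))
```

Keeping the policy as data means the security team can change a rule in one place and every later generation request picks it up.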

What changes: The feedback loop shrinks because fewer vulnerabilities are created in the first place.

The Organizational Shift This Requires

Technology alone does not fix this. The feedback loop persists partly because of how security and engineering teams interact.

Security teams need to stop measuring success by the number of findings. More findings do not mean better security – they often mean the process is broken upstream. The metric that matters is mean time to secure code, not mean time to detect insecure code.

Engineering teams need to stop treating security fixes as busywork. When AI-generated code produces security debt, the response should not be “AI, fix this finding.” It should be “why is our AI generating insecure code, and how do we fix that at the source?”

Platform teams need to build the infrastructure that makes secure development the default path. Secure templates. Validated libraries. AI-integrated security tooling that developers actually want to use because it makes their work easier, not harder.

What to Do Monday Morning

If you are running a DevSecOps program and watching AI-generated findings pile up, here is where to start:

- Audit the loop: Track how many security findings originate from AI-generated code. If you do not measure it, you cannot fix it.

- Evaluate AI security tooling: Look at solutions that integrate security checks into the code generation step, not just the scanning step.

- Reduce your scanner noise: Implement finding deduplication and root cause analysis. Fifty tickets for the same pattern is not security – it is overhead.

- Invest in secure defaults: Build a library of approved, secure code patterns that your AI coding tools can reference. Make the right thing the easy thing.

- Measure prevention, not detection: Start tracking how many vulnerabilities were prevented versus found. That is the number that tells you whether your DevSecOps program is actually working.
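
The first and last items above both come down to counting. The sketch below shows one possible way to compute them, assuming each security issue is logged as a hypothetical `SecurityEvent` with the code's origin and the stage at which the issue was caught. The field names and the two stage labels are assumptions, and attributing code to an AI assistant reliably is a separate problem this sketch does not solve.

```python
from dataclasses import dataclass


@dataclass
class SecurityEvent:
    source: str  # "ai" or "human": who authored the affected code
    stage: str   # "generation": caught before commit; "scan": found by a scanner later


def ai_finding_share(events) -> float:
    """Fraction of scanner findings that originate from AI-generated code."""
    found = [e for e in events if e.stage == "scan"]
    if not found:
        return 0.0
    return sum(e.source == "ai" for e in found) / len(found)


def prevention_rate(events) -> float:
    """Fraction of all issues stopped at generation time instead of found by a scan."""
    if not events:
        return 0.0
    return sum(e.stage == "generation" for e in events) / len(events)


if __name__ == "__main__":
    log = [
        SecurityEvent("ai", "generation"),
        SecurityEvent("ai", "generation"),
        SecurityEvent("ai", "generation"),
        SecurityEvent("ai", "scan"),
        SecurityEvent("human", "scan"),
    ]
    print(f"AI share of findings: {ai_finding_share(log):.0%}")  # 50%
    print(f"Prevention rate: {prevention_rate(log):.0%}")        # 60%
```

A rising prevention rate alongside a falling count of scanner findings is the signal that the loop is shrinking rather than just being worked faster.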

The Bottom Line

Generative AI is not going away, and neither is AI-assisted coding. The organizations that figure out how to integrate security into this new workflow – not as a gate, but as an embedded capability – will ship faster and more securely than those still trapped in the scan-fix-scan loop.

The feedback loop is not an AI problem. It is a process problem that AI made visible. Fix the process, and AI becomes your biggest security asset instead of your biggest security liability.

The question is not whether to use AI in your development workflow. It is whether your security program is evolving as fast as your development practices. For most organizations, the honest answer is: not yet.